Build Local Knowledge Base Using LLMs

This post is about building a local knowledge base using Large Language Models (LLMs) and Langchain.

# Intro

Large Language Models (LLMs) are a groundbreaking advancement in the field of natural language processing and artificial intelligence. These models have the ability to understand and generate human-like text, making them incredibly powerful tools for a wide range of applications. LLMs are trained on vast amounts of data, allowing them to learn the intricacies of language and context. They can be used for tasks such as text generation, translation, summarization, and even conversation. With their ability to comprehend and generate coherent and contextually relevant text, LLMs have the potential to revolutionize various industries, including content creation, customer service, and research. As these models continue to evolve and improve, they hold the promise of transforming the way we interact with and utilize language in the digital world.

Using Large Language Models (LLMs) to build local knowledge bases is a groundbreaking approach that combines the power of natural language processing with the ability to store and retrieve information specific to a particular domain or locality. LLMs, with their remarkable language understanding capabilities, can be leveraged to create intelligent systems that not only comprehend and generate human-like text but also possess a vast amount of contextual knowledge. By training LLMs on local data sources such as regional news articles, historical records, or community forums, we can create knowledge bases that capture the unique characteristics and nuances of a specific location or community. These local knowledge bases can then be utilized to provide accurate and relevant information, answer queries, and assist users in a variety of applications, including virtual assistants, chatbots, and recommendation systems. By harnessing the power of LLMs, we can build intelligent systems that not only understand language but also possess a deep understanding of the local context, enabling more personalized and contextually relevant interactions with users.

LangChain (opens new window) is a framework for developing applications powered by language models. It enables applications that are:

- Data-aware: connect a language model to other sources of data

- Agentic: allow a language model to interact with its environment

The main value props of LangChain are:

- Components: abstractions for working with language models, along with a collection of implementations for each abstraction. Components are modular and easy-to-use, whether you are using the rest of the LangChain framework or not

- Off-the-shelf chains: a structured assembly of components for accomplishing specific higher-level tasks

Off-the-shelf chains make it easy to get started. For more complex applications and nuanced use-cases, components make it easy to customize existing chains or build new ones.

Combine the power of the LLMs and the Langchain library, it is now possible to build a local knowledge base application in just a few hundreds lines of code.

# Overall Design of the application

For this application, we are going to build the following components:

- A document loader that will load text chunks from different file types such as pdf, epub, docx, txt, etc.

- An embedding (opens new window) engine to convert text chunks into embeddings.

- A vector database to save embeddings

- Finally, let the LLMs to query the vector database to find the relevant answers to user queries.

# Text Loader

langchain has text loaders for a variety of document format. It also has different text splitters to convert the document into chunks of tokens (opens new window).

from langchain.document_loaders import (

PyPDFLoader,

TextLoader,

Docx2txtLoader,

DirectoryLoader,

UnstructuredEPubLoader,

)

from langchain.docstore.document import Document

from langchain.text_splitter import RecursiveCharacterTextSplitter, TextSplitter

def parse_document(doc: str, doc_type: str = "pdf") -> list:

"""parse document files and chunking into list of Document"""

parse_funcs = {

"pdf": parse_pdf,

"docx": parse_docx,

"txt": parse_txt,

"epub": parse_epub,

}

default_text_splitter = RecursiveCharacterTextSplitter(

chunk_size=500,

chunk_overlap=100,

length_function=len,

add_start_index=True,

)

if doc_type in parse_funcs:

print(parse_funcs[doc_type])

return parse_funcs[doc_type](doc, default_text_splitter)

raise NotImplementedError(f"Document type {doc_type} is not supported!")

def parse_epub(doc: str, text_splitter: TextSplitter) -> list[Document]:

"""parse epub document"""

loader = UnstructuredEPubLoader(doc)

pages = loader.load_and_split(text_splitter)

return pages

# Embeddings and Vector databases

For the embedding engine, we can use OpenAI's embedding API (opens new window).

For the vector database, there are many choices such as FAISS (opens new window), Pinecone (opens new window) and Chroma (opens new window). Here we use Chroma as the vector database.

class KnowledgeBase:

def __init__(self):

pass

@staticmethod

def init_chromadb(docs: list[Document]):

client_settings = chromadb.configure(

chroma_db_impl="duckdb+parquet",

persist_directory="db",

anonymized_telemetry=False,

)

embeddings = OpenAIEmbeddings(client=None)

vectorstore = Chroma(

collection_name="test01",

embedding_function=embeddings,

client_settings=client_settings,

persist_directory="db",

)

vectorstore.add_documents(documents=docs, embedding=embeddings)

vectorstore.persist()

return vectorstore

@staticmethod

def query_chromadb(query: str):

client_settings = chromadb.configure(

chroma_db_impl="duckdb+parquet",

persist_directory="db",

anonymized_telemetry=False,

)

embeddings = OpenAIEmbeddings(client=None)

vectorstore = Chroma(

collection_name="test01",

embedding_function=embeddings,

client_settings=client_settings,

persist_directory="db",

)

return vectorstore.similarity_search(query=query, k=4)

# Query the Vector Database

langchain provides different chains (opens new window) to process different types of user queries through LLMs.

For the LLM, we also used OpenAI's gpt-3.5-turbo model, which is also the default OpenAI chat model in langchain.

For the langchain chain object, we use the load_qa_with_sources_chain for the "ask and answer" type of user queries.

class KnowledgeBase():

async def ask(self, query: str) -> str:

"""query the knowledge base"""

relavent_docs = self.query_chromadb(query)

llm = ChatOpenAI(

temperature=0.2,

client=None,

# streaming=True,

# callbacks=[StreamingStdOutCallbackHandler()],

)

prompt = prompt_template()

chain = load_qa_with_sources_chain(

llm=llm, verbose=True, chain_type="stuff", prompt=prompt

)

result = chain.arun(

input_documents=relavent_docs,

question=query,

return_only_outputs=True,

)

return await result

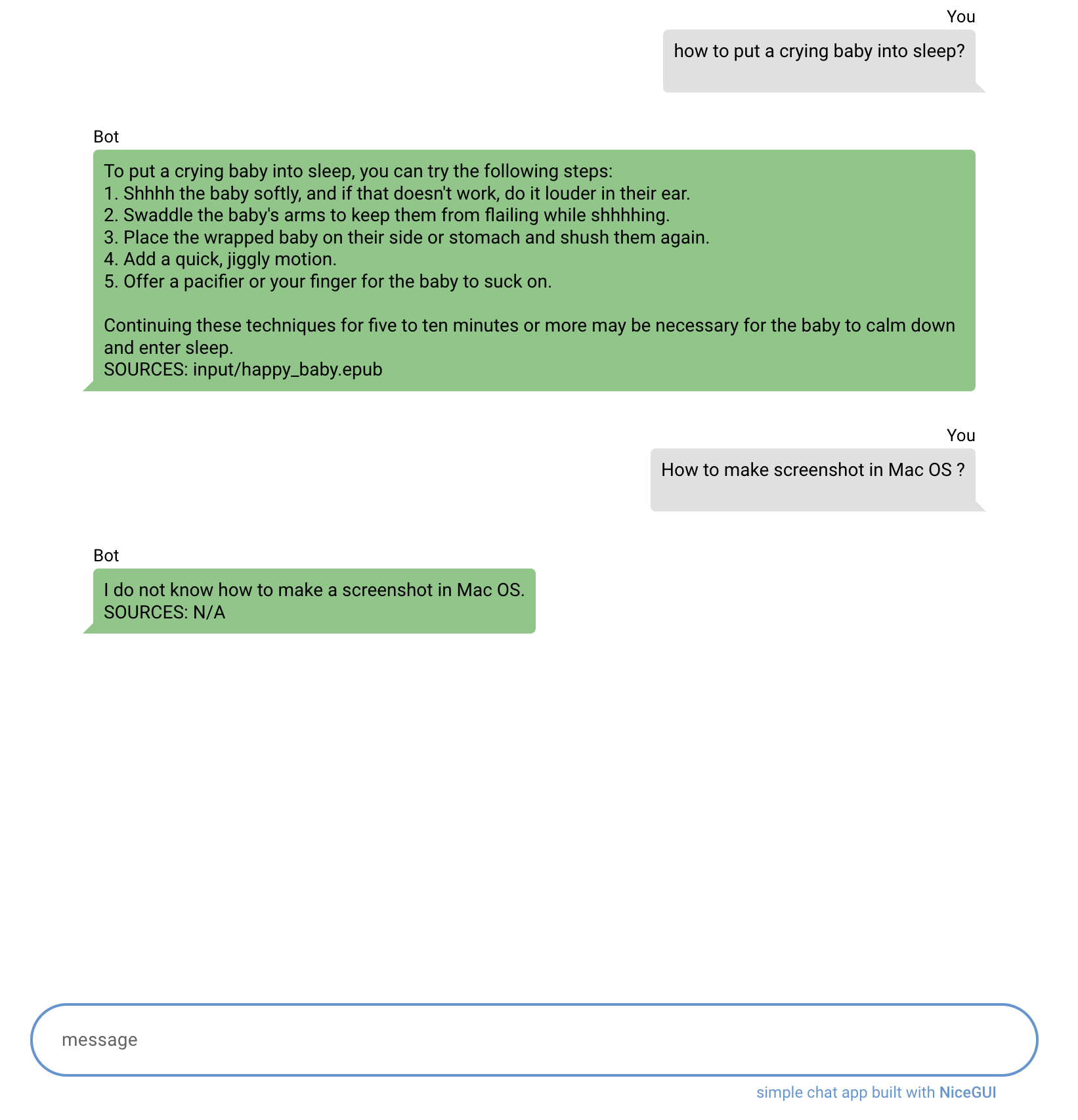

# Final touch - Chat UI with NiceGUI

Lastly, we can use a web UI framework to quickly add a user interface for the local knowledge base.

As we can see in the screenshot below, the local knowledge base will be able to answer user queries using the information in the uploaded documents with a reference to the source document. If a user asks a non-relevant question, it will just answer "I don't know" instead of a hallucination answer like the generic ChatGPT would do.